A Sparse Sampling-based framework for Semantic Fast-Forward of First-Person Videos

2021 IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)

Abstract

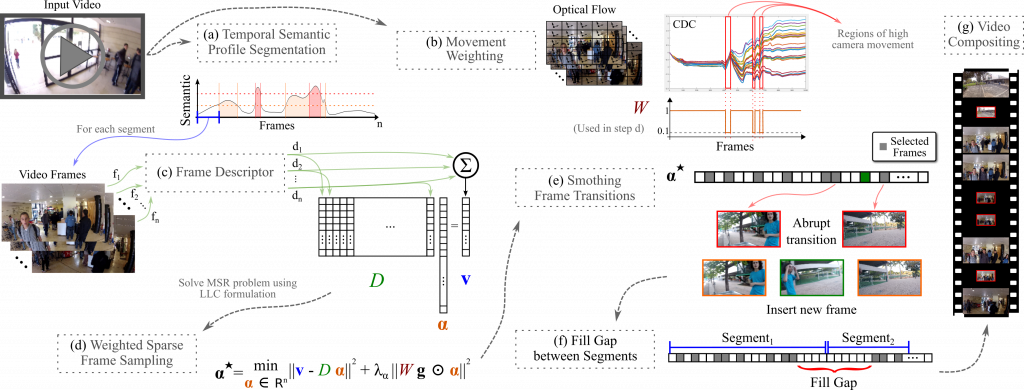

Technological advances in sensors have paved the way for digital cameras to become increasingly ubiquitous, which, in turn, led to the popularity of the self-recording culture. As a result, the amount of visual data on the Internet is moving in the opposite direction of the available time and patience of the users. Thus, most of the uploaded videos are doomed to be forgotten and unwatched, stashed away in some computer folder or website. In this paper, we address the problem of creating smooth fast-forward videos without losing the relevant content. We present a new adaptive frame selection formulated as a weighted minimum reconstruction problem. Using a smoothing frame transition and filling visual gaps between segments, our approach accelerates first-person videos emphasizing the relevant segments and avoids visual discontinuities. Experiments conducted on controlled videos and also on an unconstrained dataset of First-Person Videos (FPVs) show that, when creating fast-forward videos, our method is able to retain as much relevant information and smoothness as the state-of-the-art techniques, but in less processing time.
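To give an intuition for what "frame selection formulated as a weighted minimum reconstruction problem" can look like, the sketch below solves a generic weighted L1-regularized reconstruction with a plain iterative soft-thresholding (ISTA) loop. It is an illustration under assumptions, not the formulation or solver used in the paper: the function name `weighted_sparse_select`, the matrix `D` (one hypothetical descriptor per frame, stored as columns), the vector `v` (a hypothetical descriptor of the video segment), and the per-frame `weights` are all introduced here for the example only.

```python
import numpy as np


def weighted_sparse_select(D, v, weights, lam=0.1, n_iter=500):
    """Approximately solve
        min_a  0.5 * ||v - D a||_2^2 + lam * sum_i weights[i] * |a_i|
    with ISTA. Frames whose coefficient is nonzero count as selected.

    D       : (d, n) array, one hypothetical frame descriptor per column
    v       : (d,) array, hypothetical descriptor of the whole segment
    weights : (n,) array, larger values make a frame costlier to select
    """
    D = np.asarray(D, dtype=float)
    v = np.asarray(v, dtype=float)
    weights = np.asarray(weights, dtype=float)

    # Step size 1/L, where L is the squared spectral norm of D
    # (the Lipschitz constant of the gradient of the quadratic term).
    step = 1.0 / (np.linalg.norm(D, 2) ** 2)

    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        # Gradient step on the reconstruction error.
        z = a - step * (D.T @ (D @ a - v))
        # Weighted soft-thresholding: each frame has its own threshold.
        a = np.sign(z) * np.maximum(np.abs(z) - step * lam * weights, 0.0)
    return a


# Usage: three toy "frames"; the third gets a heavy penalty.
D_demo = np.eye(3)
v_demo = np.array([1.0, 0.05, 0.5])
coeffs = weighted_sparse_select(D_demo, v_demo, weights=[1.0, 1.0, 10.0])
selected_frames = np.flatnonzero(coeffs)
```

In this toy setting, raising the weight of a frame makes it less likely to be picked, which is the role a per-frame weight plays in a weighted reconstruction: it lets side information (for example, a hypothetical shakiness or relevance score) bias which frames survive.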

Source code (NEW!) | ArXiv (NEW!)

Methodology and Visual Results

Citation

@ARTICLE{Silva2021tpami,

author = {M. {Silva} and W. {Ramos} and M. {Campos} and E. R. {Nascimento}},

journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},

title = {A Sparse Sampling-based framework for Semantic Fast-Forward of First-Person Videos},

year = {2021},

volume = {43},

number = {4},

pages = {1438-1444},

doi = {10.1109/TPAMI.2020.2983929},

ISSN = {0162-8828}

}

Baselines

We compare the proposed methodology against the following methods:

- EgoSampling – Poleg et al., EgoSampling: Fast-forward and stereo for egocentric videos, CVPR 2015.

- Microsoft Hyperlapse – Joshi et al., Real-time hyperlapse creation via optimal frame selection, ACM Trans. Graph., 2015.

- Stabilized Semantic Fast-Forward (SSFF) – Silva et al., Towards semantic fast-forward and stabilized egocentric videos, EPIC@ECCV 2016.

- Multi-Importance Semantic Fast-Forward (MIFF) – Silva et al., Making a long story short: A Multi-Importance fast-forwarding egocentric videos with the emphasis on relevant objects, JVCI 2017.

- Sparse Adaptive Sampling (SAS) – Silva et al., A Weighted Sparse Sampling and Smoothing Frame Transition Approach for Semantic Fast-Forward First-Person Videos, CVPR 2018.

Datasets

We conducted the experimental evaluation using the following datasets:

- Semantic Dataset – Silva et al., Towards Semantic Fast-Forward and Stabilized Egocentric Videos, EPIC@ECCV 2016.

- Dataset of Multimodal Semantic Egocentric Videos (DoMSEV) – Silva et al., A Weighted Sparse Sampling and Smoothing Frame Transition Approach for Semantic Fast-Forward First-Person Videos, CVPR 2018.

Authors

Michel Melo da Silva

Researcher

Washington Luis de Souza Ramos

PhD Candidate

Mario F. M. Campos

Professor