A Shape-Aware Retargeting Approach to Transfer Human Motion and Appearance in Monocular Videos

International Journal of Computer Vision (IJCV)

Abstract

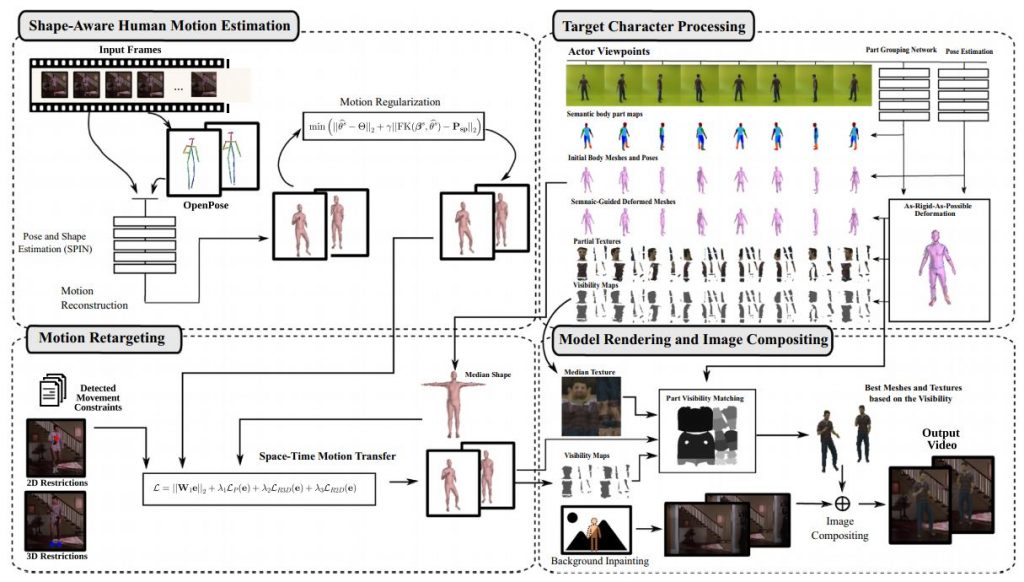

Transferring human motion and appearance between videos of human actors remains one of the key challenges in Computer Vision. Despite the advances of recent image-to-image translation approaches, there are several transfer contexts in which most end-to-end learning-based retargeting methods still perform poorly. Transferring human appearance from one actor to another is only ensured when a strict setup has been complied with, and that setup is generally built around the specificities of each method's training regime. In this work, we propose a shape-aware approach based on a hybrid image-based rendering technique that achieves visual retargeting quality competitive with state-of-the-art neural rendering approaches. The formulation incorporates the user's body shape into the retargeting while enforcing physical constraints on the motion in both 3D and the 2D image domain. We also present a new video retargeting benchmark dataset, composed of different videos with annotated human motions, to evaluate the task of synthesizing videos of people; it can serve as a common basis for tracking progress in the field. The dataset and its evaluation protocols are designed to evaluate retargeting methods under more general and challenging conditions. Our method is validated in several experiments comprising publicly available videos of actors with different body shapes, motion types, and camera setups. The dataset and retargeting code are publicly available to the community at: https://www.verlab.dcc.ufmg.br/retargeting-motion.


Source code | ArXiv

Dataset: [IJCV 2021] Retargeting dataset. A video retargeting benchmark dataset composed of different videos with annotated human motions, designed to evaluate the task of synthesizing videos of people.

Citation

@Article{Gomes2021,
  author={Gomes, Thiago L. and Martins, Renato and Ferreira, Jo{\~a}o and Azevedo, Rafael and Torres, Guilherme and Nascimento, Erickson R.},
  title={A Shape-Aware Retargeting Approach to Transfer Human Motion and Appearance in Monocular Videos},
  journal={International Journal of Computer Vision},
  year={2021},
  month={Apr},
  day={29},
  issn={1573-1405},
  doi={10.1007/s11263-021-01471-x},
  url={https://doi.org/10.1007/s11263-021-01471-x}
}

Authors

Thiago Luange Gomes

Researcher

Renato José Martins

Professor at Université de Bourgogne

João Pedro Moreira Ferreira

MSc Student

Rafael Augusto Vieira de Azevedo

MSc Student

Guilherme Alvarenga Torres

Undergraduate Student