Musical Hyperlapse: A Multimodal Approach to Accelerate First-Person Videos

2021 34th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI)

Abstract

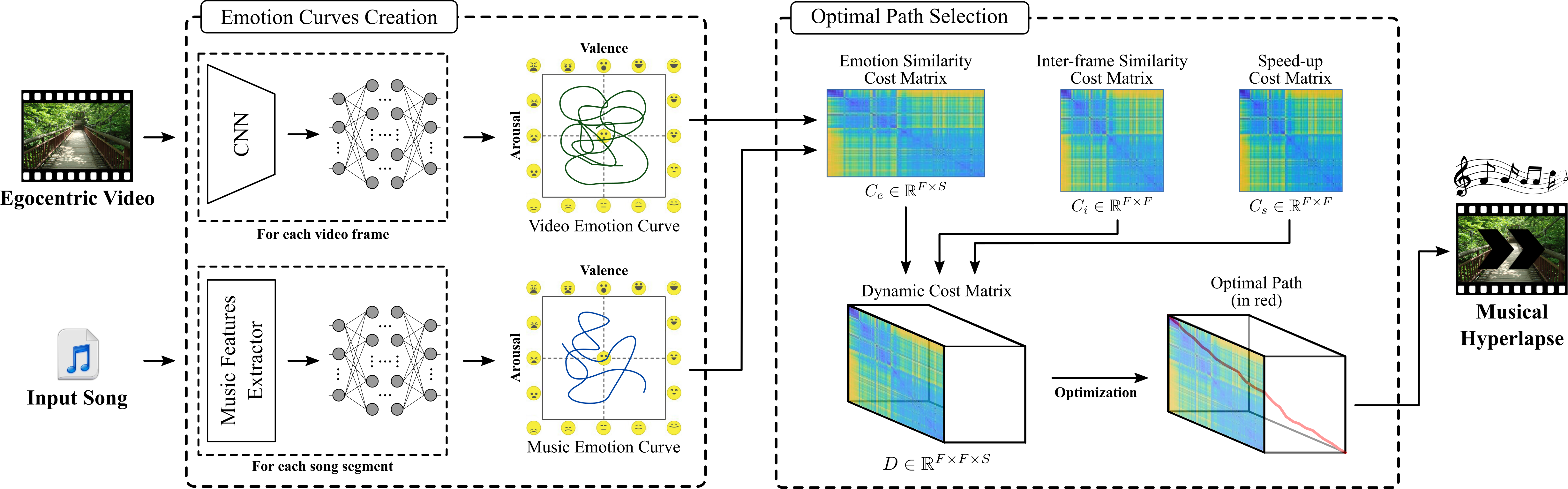

With the advance of technology and social media usage, recording first-person videos has become a widespread habit. These videos are usually very long and tiring to watch, which creates the need to speed them up. Despite recent progress in fast-forward methods, they generally do not consider adding background music to the videos, which could make them more enjoyable. This paper presents a new methodology that creates accelerated videos and adds background music while preserving the emotion induced by the visual and acoustic modalities. Our methodology is based on the automatic recognition of the emotions induced by music and video content, combined with an optimization algorithm that maximizes the visual quality of the output video while maximizing the similarity between the emotions of the music and the video. Quantitative results show that, compared with other methods from the literature, our method achieves the best performance in matching emotion similarity while also maintaining the visual quality of the output video.
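The abstract describes the optimization only at a high level. The sketch below illustrates one generic way to pose frame selection as a trade-off between visual smoothness and emotion agreement with the music. It is a minimal sketch under stated assumptions, not the authors' formulation: emotions are assumed to be (valence, arousal) pairs, the music is assumed to be summarized as one emotion per output frame, and `select_frames`, `transition_cost`, `lam`, and `max_skip` are hypothetical names. The solver is plain dynamic programming over (output position, input frame), in the spirit of optimal frame selection.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical emotion representation: a (valence, arousal) pair.
Emotion = Tuple[float, float]


def select_frames(
    video_emotions: Sequence[Emotion],
    music_emotions: Sequence[Emotion],
    transition_cost: Callable[[int, int], float],  # hypothetical visual cost of jumping from frame i to j
    max_skip: int,
    lam: float = 1.0,  # hypothetical weight of the emotion-mismatch term
) -> List[int]:
    """Pick one input frame per music slot, minimizing visual cost + lam * emotion distance."""
    n = len(video_emotions)
    k = len(music_emotions)  # assumption: one output frame per music emotion sample
    INF = float("inf")
    # cost[t][j]: best total cost of an output sequence whose t-th frame is input frame j
    cost = [[INF] * n for _ in range(k)]
    back = [[-1] * n for _ in range(k)]
    # The output always starts at the first input frame.
    cost[0][0] = lam * math.dist(video_emotions[0], music_emotions[0])
    for t in range(1, k):
        for j in range(1, n):
            match = lam * math.dist(video_emotions[j], music_emotions[t])
            # Only jumps of at most max_skip frames are allowed.
            for i in range(max(0, j - max_skip), j):
                if cost[t - 1][i] == INF:
                    continue
                c = cost[t - 1][i] + transition_cost(i, j) + match
                if c < cost[t][j]:
                    cost[t][j] = c
                    back[t][j] = i
    # The output always ends at the last input frame.
    if k == 0 or cost[k - 1][n - 1] == INF:
        raise ValueError("no feasible selection; increase max_skip or shorten the music")
    # Backtrack from the last output slot to recover the selected frames.
    path = [n - 1]
    for t in range(k - 1, 0, -1):
        path.append(back[t][path[-1]])
    return path[::-1]
```

For example, with a transition cost such as `lambda i, j: (j - i - 3) ** 2`, which favors a 3x speed-up, the solver keeps a regular skip unless a nearby frame matches the music's emotion much better and `lam` is large enough to justify the irregular jump. The running time is O(k · n · max_skip), which is why the skip length is bounded.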


Citation

@INPROCEEDINGS{Matos2021sibgrapi,
  author={de Matos, Diognei and Ramos, Washington and Romanhol, Luiz and Nascimento, Erickson R.},
  booktitle={2021 34th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI)},
  title={Musical Hyperlapse: A Multimodal Approach to Accelerate First-Person Videos},
  year={2021},
  volume={},
  number={},
  pages={184-191},
  doi={10.1109/SIBGRAPI54419.2021.00033}}


Baselines

We compare the proposed methodology against the following methods:

- Microsoft Hyperlapse (MSH) – Joshi et al., Real-time hyperlapse creation via optimal frame selection, ACM Trans. Graph. 2015.

- Sparse Adaptive Sampling (SASv2) – Silva et al., A sparse sampling-based framework for semantic fast-forward of first-person videos, TPAMI 2021.

Datasets

We conducted the experimental evaluation using the following dataset:

- Musical Hyperlapse Dataset – Matos et al., Musical Hyperlapse: A Multimodal Approach to Accelerate First-Person Videos, SIBGRAPI 2021.

Authors

Diognei de Matos

Researcher

Washington Luis de Souza Ramos

PhD Candidate

Luiz Henrique Romanhol Ferreira

Undergraduate Student